Practical guides on Catboost

What exactly is Catboost? How does it differ from LightGBM and XGBoost?

🚀 Subscribe to us for deep-dive content in DS, ML or AI! 🚀

Table of contents

Quick summary

Concepts for the algorithm

General and Catboost-specific parameters

Practical considerations

More resources

Quick summary

Catboost is another powerful library for gradient tree boosting, similar to LightGBM and XGBoost.

Compared to other boosting implementations (LightGBM and XGBoost), its special features are:

Ordered boosting (a slightly modified gradient boosting algorithm)

Advanced categorical variable handling

Feature combination

Symmetric trees

We will elaborate on these concepts in the following sections.

Concepts for the algorithm

The following assumes that you are already familiar with how gradient boosting works. For a brief review, please visit Concepts of boosting algorithms in machine learning.

Ordered boosting

One source of overfitting — Repeated use of the same dataset for each boosting iteration can lead to overfitting and hence prediction shift.

In many gradient boosting implementations (such as LightGBM and XGBoost), the whole training dataset is used to compute residuals, gradients or hessians based on the intermediate model from the previous boosting iterations. This repeated use of the same data points is one of the sources of overfitting.

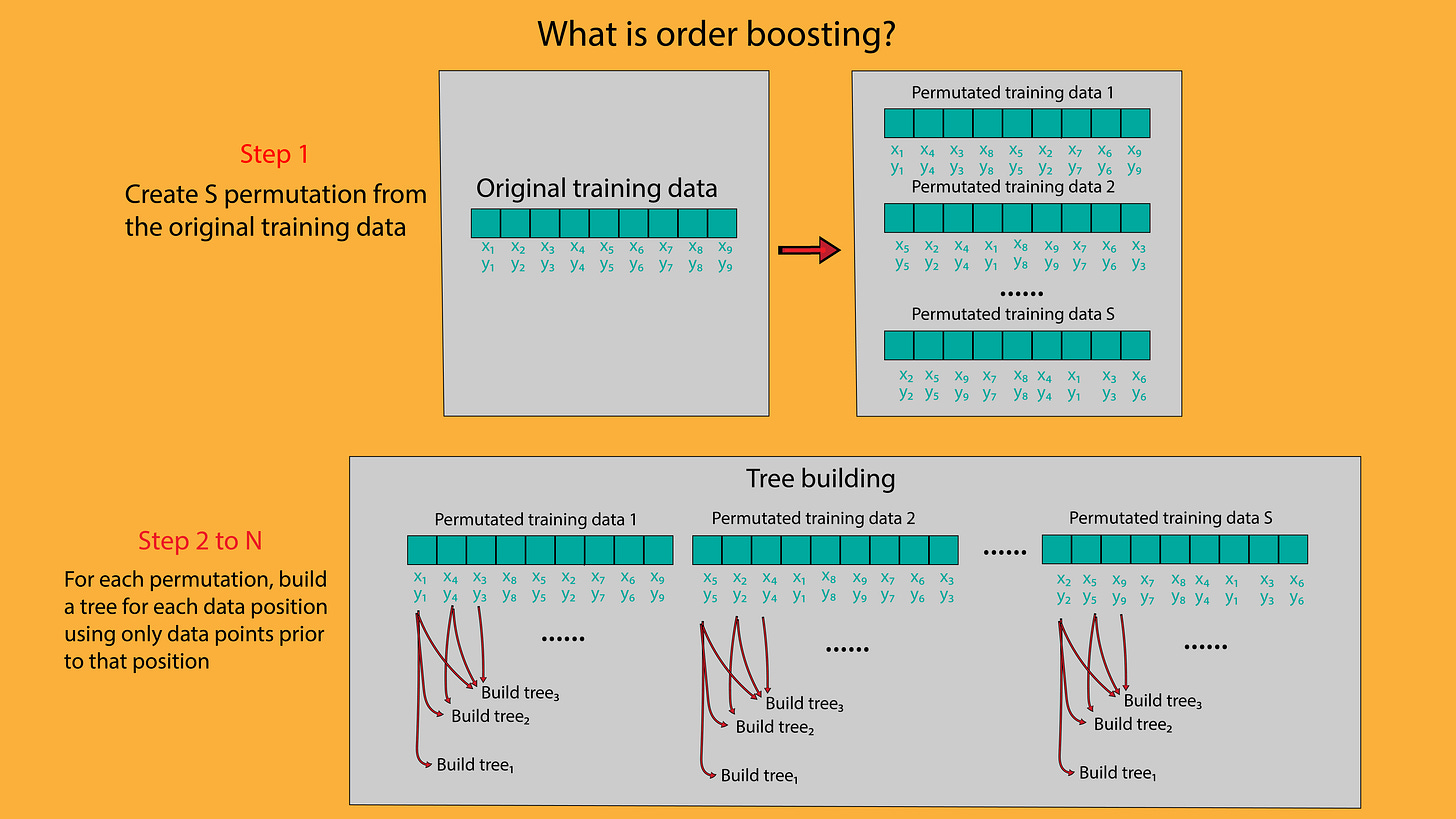

Ordered boosting — a technique that builds the trees in each iteration from random permutations of the training samples.

To mitigate this problem, Catboost uses ordered boosting.

First, the original training dataset is randomly permuted S times. At each iteration, Catboost builds a list of new trees, one for each data position i, using only the data points before position i.

This conceptual implementation scales as O(SN^2), where N is the number of data points, which is not practical for large datasets. In practice, Catboost applies ordered boosting mainly when the training set is small. In addition, instead of maintaining a tree for every data position, it maintains trees for only about log(N) positions (in the original paper, prefixes of the permutation whose lengths are powers of two). This reduces the complexity to O(SN).
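The idea that each sample is scored by a model that has never seen its label can be sketched in a few lines. This is only a toy illustration under stated assumptions: the "model" is a running mean of earlier targets rather than a tree, a single permutation is used, and the helper names `ordered_residuals` and `prefix_lengths` are hypothetical, not part of Catboost.

```python
import random


def ordered_residuals(targets, seed=0):
    """Residual of each sample from a stand-in model fit only on earlier samples.

    The stand-in model is the running mean of the targets that come earlier
    in one random permutation; a real implementation would fit trees.
    """
    order = list(range(len(targets)))
    random.Random(seed).shuffle(order)  # one random permutation

    residuals = [0.0] * len(targets)
    running_sum, seen = 0.0, 0
    for idx in order:
        # The prediction uses only samples that precede idx in the permutation.
        prediction = running_sum / seen if seen else 0.0
        residuals[idx] = targets[idx] - prediction
        running_sum += targets[idx]
        seen += 1
    return order, residuals


def prefix_lengths(n):
    """Doubling prefix lengths: about log2(n) supporting models instead of n."""
    lengths, size = [], 1
    while size < n:
        lengths.append(size)
        size *= 2
    return lengths


targets = [1.0, 0.0, 1.0, 1.0, 0.0]
order, residuals = ordered_residuals(targets)
print(order, residuals)
print(prefix_lengths(1000))  # 1, 2, 4, ..., 512
```

No residual depends on the sample's own label, which is the property that the permutation is meant to guarantee. The doubling prefix lengths hint at why far fewer models need to be kept than one per position.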

Advanced categorical variable handling

Catboost's handling of categorical variables is more elaborate than that of its counterparts.

Catboost supports several techniques to handle categorical variables, such as target encoding with ordering and feature combination.

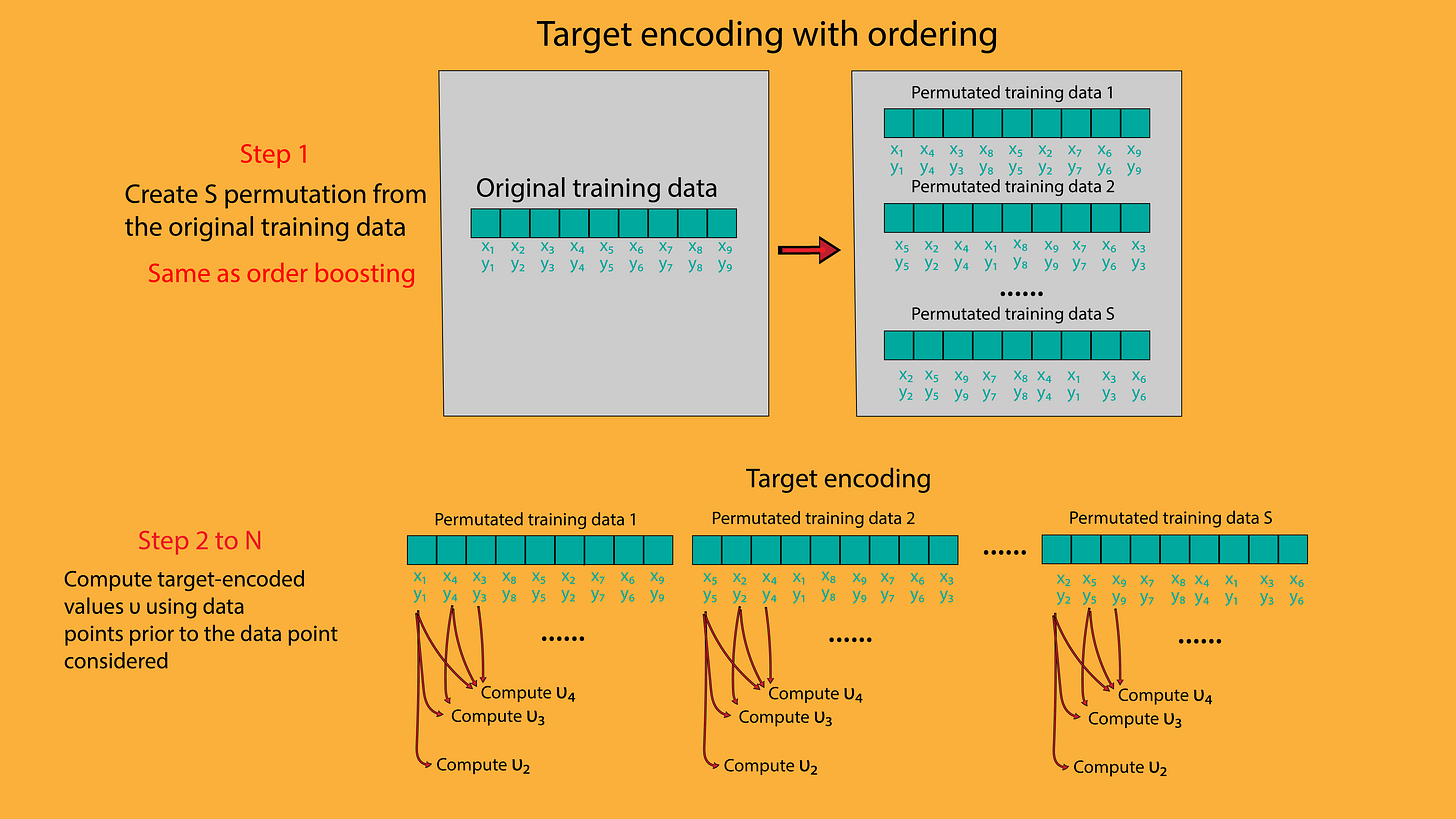

Target encoding with ordering — One way to handle categorical variables is target encoding. However, this technique often leads to overfitting because the label values are used directly in feature preparation. Similar to the boosting technique in the previous section, Catboost addresses this problem by computing the target-encoded value (usually the average target value for a particular category) using only the data points prior to the position considered, as shown in the accompanying figure.
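The ordered target encoding described above can be sketched as follows. The rows are assumed to be already in permutation order, and the smoothing terms `prior` and `prior_weight` are illustrative choices for handling a category's first occurrence, not Catboost defaults.

```python
from collections import defaultdict


def ordered_target_encoding(categories, targets, prior=0.5, prior_weight=1.0):
    """Encode each row using only the targets of earlier rows in the same category.

    Rows are assumed to be in the (random) permutation order already.
    prior and prior_weight are illustrative smoothing values.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    encoded = []
    for category, target in zip(categories, targets):
        # The statistic is computed before this row's own target is added.
        value = (sums[category] + prior_weight * prior) / (counts[category] + prior_weight)
        encoded.append(value)
        sums[category] += target
        counts[category] += 1
    return encoded


pets = ["dog", "cat", "dog", "dog", "cat"]
labels = [1, 0, 1, 0, 1]
encoded = ordered_target_encoding(pets, labels)
print(encoded)
```

The first occurrence of each category falls back to the prior, and later rows see progressively more history, so a row's own label never leaks into its encoded value.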

Feature combination — In addition, Catboost combines different categorical features. For example, if the dataset has two categorical features, pet (with values dog and cat) and color (with values white and black), Catboost would create new categories such as white dog, white cat, black dog and black cat. It is important to note that Catboost performs this combination at each boosting iteration and adds combinations incrementally to avoid sparsity.

Feature combination allows more complex combinations of categorical values while maintaining reasonable training speed.
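As a rough picture of what a combined category looks like, the pet and color example can be written out directly. This sketch only shows the resulting values; it does not reproduce how Catboost selects which combinations to build during training, and `combine_features` is a hypothetical helper.

```python
def combine_features(rows, first, second):
    """Build one combined categorical value per row from two categorical columns."""
    return [f"{row[first]} {row[second]}" for row in rows]


rows = [
    {"pet": "dog", "color": "white"},
    {"pet": "cat", "color": "white"},
    {"pet": "dog", "color": "black"},
    {"pet": "cat", "color": "black"},
]
combined = combine_features(rows, "color", "pet")
print(combined)  # ['white dog', 'white cat', 'black dog', 'black cat']
```

The combined column can then be target-encoded like any other categorical feature.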

Symmetric trees

Unlike XGBoost and LightGBM, Catboost uses symmetric trees as its base predictors by default. At each depth, all nodes use the same splitting rule. One advantage of this design is faster inference. The simpler tree structure also helps reduce overfitting.

Symmetric trees — the splitting condition is the same at each depth
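One way to see why symmetric trees are fast at inference: because every level shares one condition, a leaf can be located by collecting one bit per level. The function below is a minimal sketch with a hypothetical data layout (`splits` and `leaf_values`), not Catboost's internal representation.

```python
def predict_symmetric_tree(sample, splits, leaf_values):
    """Evaluate one symmetric tree.

    splits: one (feature_index, threshold) pair per depth level, shared by
            every node at that level.
    leaf_values: 2 ** len(splits) values, indexed by the bit pattern of the
                 comparison results.
    """
    leaf_index = 0
    for feature_index, threshold in splits:
        bit = 1 if sample[feature_index] > threshold else 0
        leaf_index = (leaf_index << 1) | bit
    return leaf_values[leaf_index]


# Depth-2 tree: level 0 tests feature 0 > 0.5, level 1 tests feature 1 > 10.
splits = [(0, 0.5), (1, 10.0)]
leaf_values = [-1.0, -0.5, 0.5, 1.0]
print(predict_symmetric_tree([0.9, 3.0], splits, leaf_values))  # 0.5
```

There is no branching on tree structure, only a fixed sequence of comparisons, which is easy to vectorize across samples.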

Advanced bootstrapping strategy

Compared to LightGBM and XGBoost, Catboost supports a wider range of bootstrapping strategies during tree building:

Bayesian, Bernoulli, MVS, Poisson

One can also define the sampling unit and sampling frequency.

By contrast, LightGBM supports bagging and GOSS (for a description of GOSS, please visit here), while XGBoost supports uniform and gradient-based sampling.

Parameters

General parameters (similar to LightGBM and XGBoost)

Catboost supports various parameters to control and tune training processes specific to boosting.

These parameters have similar counterparts in other implementations (LightGBM, XGBoost), and their meanings are easily understood given a basic understanding of gradient boosting (Please visit here for a deep-dive into gradient boosting).

The most common ones are as follows:

loss_function — the training objective for the problem

learning_rate — the factor by which each new predictor is scaled at each boosting iteration

iterations — total number of boosting rounds to be performed.

random_seed — initial random seed used for training.

l2_leaf_reg — L2 regularization term during tree building

subsample — bagging rate during tree building, used when the bootstrap type is Poisson, Bernoulli or MVS.

colsample_bylevel — fraction of features to be used during each tree split.

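min_data_in_leaf — minimum number of training samples required in each leaf (applies to the Depthwise and Lossguide grow policies).

max_leaves — maximum number of leaves in a tree (applies to the Lossguide grow policy)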

grow_policy — a parameter to choose between

SymmetricTree (All leaves from the previous depth are split with the same condition)

Depthwise (All non-terminal leaves are split, each with its own condition)

Lossguide (The non-terminal leaf with the best loss improvement is split)

leaf_estimation_method — The method used to calculate the prediction from a leaf. It is a parameter to choose between:

Newton

Gradient

Exact
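To make the list above concrete, here is one way these general parameters might be collected into a single configuration. All values are hypothetical and chosen only for illustration. The combination assumes the Lossguide policy so that max_leaves and min_data_in_leaf apply, and the Bernoulli bootstrap so that subsample applies.

```python
# Hypothetical values for illustration; tune them for your own data.
general_params = {
    "loss_function": "Logloss",      # binary classification objective
    "learning_rate": 0.05,
    "iterations": 500,
    "random_seed": 42,
    "l2_leaf_reg": 3.0,
    "bootstrap_type": "Bernoulli",   # lets subsample take effect
    "subsample": 0.8,
    "colsample_bylevel": 0.8,
    "grow_policy": "Lossguide",      # needed for max_leaves
    "max_leaves": 31,
    "min_data_in_leaf": 20,
    "leaf_estimation_method": "Newton",
}

# Assuming the catboost package is installed and X_train, y_train exist,
# the dictionary could be passed along these lines:
#   from catboost import CatBoostClassifier
#   model = CatBoostClassifier(**general_params)
#   model.fit(X_train, y_train)
print(sorted(general_params))
```

Keeping the settings in a plain dictionary makes it easy to log them or to swap in values from a hyperparameter search.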

Specific parameters to Catboost

bootstrap_type

Catboost supports a wide variety of sampling methods during tree building. These methods control the weight assigned to each training sample when splits are chosen, which can partly reduce overfitting during training.

Specifically, Catboost supports the following options:

Bayesian — the weight of each training sample is calculated by:

\(w = (-\log{\phi})^t\), where \(\phi\) is a random number sampled from the uniform distribution on [0, 1]. The parameter \(t\) is the bagging temperature and can be defined by setting bagging_temperature in Catboost.

Bernoulli — this is the typical bagging method also used in LightGBM and XGBoost. The sampling rate is defined by the parameter subsample, as described in the previous section.

MVS — Similar to GOSS in LightGBM, this is a bagging method which samples training samples based on gradient values. For more detail about this complex algorithm, please visit the paper here.

Poisson — the weight of each training sample is drawn from a Poisson distribution. This option is available for GPU training only.

Please note that one can also control the frequency of sampling with the parameter sampling_frequency:

PerTree — weights are determined before each new tree is constructed

PerTreeLevel — weights are determined before each new split is chosen
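The Bayesian and Bernoulli weighting schemes above are simple enough to sketch with the standard library. This is an illustration of the formulas only, not of Catboost's sampler. The function names are hypothetical, and the random draw is shifted to the interval (0, 1] so that the logarithm is always defined.

```python
import math
import random


def bayesian_weights(n_samples, bagging_temperature=1.0, seed=0):
    """Draw w = (-log(phi)) ** t for each sample, with phi uniform on (0, 1]."""
    rng = random.Random(seed)
    weights = []
    for _ in range(n_samples):
        phi = 1.0 - rng.random()  # shift [0, 1) to (0, 1] so log(phi) is defined
        weights.append((-math.log(phi)) ** bagging_temperature)
    return weights


def bernoulli_weights(n_samples, subsample=0.8, seed=0):
    """Keep each sample with probability subsample (weight 1), else drop it (weight 0)."""
    rng = random.Random(seed)
    return [1.0 if rng.random() < subsample else 0.0 for _ in range(n_samples)]


print(bayesian_weights(5, bagging_temperature=0.0))  # temperature 0: every weight is 1
print(bayesian_weights(5, bagging_temperature=1.0))
print(bernoulli_weights(5))
```

With a temperature of 0 all samples are weighted equally. Raising the temperature spreads the weights out, which makes the sampling more aggressive.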

nan_mode

This parameter selects the strategy for handling missing data during training. While LightGBM handles missing data in a default way, Catboost lets us choose the strategy explicitly.

In particular the following options are provided:

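Forbidden — missing values are not allowed; an error is raised when one is encountered.

Min — missing values are treated as smaller than all other values of the feature, so they always go to the left side of a split

Max — missing values are treated as larger than all other values of the feature, so they always go to the right side of a split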
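The three options can be pictured as a preprocessing step that pushes missing values to one extreme of the feature range. The helper below, `apply_nan_mode`, is a hypothetical conceptual stand-in and does not reflect how Catboost stores or bins missing values internally.

```python
import math


def apply_nan_mode(values, nan_mode="Min"):
    """Conceptual stand-in for nan_mode: place missing values at one extreme."""
    has_missing = any(math.isnan(v) for v in values)
    if nan_mode == "Forbidden":
        if has_missing:
            raise ValueError("missing values are not allowed with nan_mode='Forbidden'")
        return list(values)
    if nan_mode == "Min":
        fill = -math.inf  # below every real value: always on the left of a split
    elif nan_mode == "Max":
        fill = math.inf   # above every real value: always on the right of a split
    else:
        raise ValueError(f"unknown nan_mode: {nan_mode}")
    return [fill if math.isnan(v) else v for v in values]


feature = [3.2, float("nan"), 1.5]
print(apply_nan_mode(feature, "Min"))
print(apply_nan_mode(feature, "Max"))
```

Whichever side is chosen, a threshold can then separate the missing values from all observed ones.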

boosting_type

This is a parameter to choose the boosting algorithm.

In particular, one can choose between:

Ordered — Choosing this enables ordered boosting (as explained in the previous sections)

Plain — This will enable the classic gradient boosting scheme.
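Putting the Catboost-specific parameters from this section together, a configuration for a small dataset might look like the sketch below. The values are hypothetical, and the grow policy is left at its default since ordered boosting is assumed here to be used with symmetric trees.

```python
# Hypothetical settings for a small dataset; values are illustrative only.
catboost_specific_params = {
    "boosting_type": "Ordered",        # or "Plain" for classic gradient boosting
    "bootstrap_type": "Bayesian",
    "bagging_temperature": 1.0,        # the t in w = (-log(phi)) ** t
    "sampling_frequency": "PerTree",   # or "PerTreeLevel"
    "nan_mode": "Min",                 # or "Max" / "Forbidden"
}

# Assuming the catboost package is installed, the dictionary could be used as:
#   from catboost import CatBoostClassifier
#   model = CatBoostClassifier(**catboost_specific_params)
print(catboost_specific_params)
```

Switching boosting_type to "Plain" is the usual first change to try when training time becomes a concern.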

Practical considerations

Catboost is generally slower but tends to perform better on small datasets, and its categorical variable handling is more elaborate.

Compared to LightGBM and XGBoost, Catboost is generally slower. With small datasets, where processing speed is not the primary concern, using Catboost with ordered boosting enabled could give better performance.

In addition, Catboost can offer significant advantages over its counterparts in situations in which the effects of feature interactions are significant. It can also be a good choice when the training data contains complex categorical variables.

Conclusion

In many ways, Catboost is similar to LightGBM and XGBoost. It also differs from them, because it has additional built-in features that its counterparts lack. This article highlights these differences and will hopefully help you feel more comfortable using this package. Stay tuned for our next deep-dive article!

More resources

Videos on Catboost
